We all influence people every day: on a small scale (could you pass me the salt?) and on a larger scale (choose me, my business or my sales proposition). But how do you get people on your side who believe the exact opposite of what you’re trying to convince them of? Or how do you get people to engage in behaviour that is better for them, their communities or the planet if they don’t believe that behaviour is the right thing to do, or think it is too hard to perform?

Often, we resort to convincing people with information, arguments and reasons. However, behavioural science sheds an interesting light on how we, as humans, process information and, above all, on this question:

How can you make people not just willing to change, but also willing to consider the information needed for that change?

We first have to understand the information context of the people we are trying to influence. What do we need to take into account when we want to get our information across? To say the least, we live in an interesting information age. News and messages come to us in many ways, but not all ways are created equal. This is the day and age in which we are all trapped in filter bubbles [1]. The digital ecosystem and its algorithms tailor our information supply to our existing views, with a preference for extremist viewpoints, creating more and more distance between different perspectives and a greater social divide.

Confirmation Bias: The godmother of information processing

Before we can understand how information is processed, we have to realise that we as human beings all suffer from so-called confirmation bias. We process information to confirm what we already think or believe. In other words, we assign greater value to evidence that favours our beliefs than to new points of view. Whether that information or evidence is true or false doesn’t matter; the post-rationalisation capacity of our brain helps us feel good about our viewpoints.

We, as humans, are champions of justification after the fact.

However, tailored information technology and confirmation bias combined can cause systemic effects that can be pretty troublesome. People who hold strong opinions on complex social issues are likely to examine relevant evidence in a biased way. This can cause us to become firmly entrenched in our beliefs. We are creating polarised societies that show little willingness for cooperation or empathy, instead of narrowing our disagreements, which is so badly needed to solve society’s challenges of today.

Let me give you an example of how strong confirmation bias can be. An experiment [2] run by researchers at Stanford University showed that even scientific findings are dismissed if they don’t match our existing beliefs. The researchers invited both opponents and proponents of the death penalty. Both groups were divided into two, with each half getting a different conclusion from scientific research on the death penalty’s effectiveness. Opponents received either a research conclusion favouring the death penalty or one opposing it. Proponents likewise got either the conclusion in favour or the conclusion against. These research conclusions were as follows:

Research conclusion in favour of the death penalty:

Kroner and Phillips compared murder rates for the year before and the year after adoption of capital punishment in 14 states. In 11 of the 14 states, murder rates were lower after adoption of the death penalty. This research supports the deterrent effect of the death penalty.

Research conclusion opposing the death penalty:

Palmer and Crandall compared murder rates in 10 pairs of neighbouring states with different capital punishment laws. In 8 of the 10 pairs, murder rates were higher in the state with capital punishment. This research opposes the deterrent effect of the death penalty.

Proponents of the death penalty who had read the first message were strengthened in their belief: “The experiment was well thought out, the data collected was valid, and they were able to come up with responses to all criticisms.” But after having read the second message, they didn’t shift beliefs; they dismissed the study: “The evidence given is relatively meaningless without data about how the overall crime rate went up in those years”, “There were too many flaws in the picking of the states and too many variables involved in the experiment as a whole to change my opinion.”

It worked the same the other way around. Opponents of the death penalty who read the conclusion against the death penalty agreed: “It shows a good direct comparison between contrasting death penalty effectiveness. Using neighbouring states helps to make the experiment more accurate by using similar locations.” The evidence in favour of the death penalty, on the other hand, was dismissed: “I don’t think they have complete enough collection of data. Also, as suggested, the murder rates should be expressed as percentages, not as straight figures.” The research showed that:

People can come to different conclusions after being exposed to the same evidence depending on their pre-existing beliefs.

This bias is so strong that prior beliefs (an effect also known as the prior attitude effect) even made people dismiss scientific proof; the evidence only strengthened their beliefs and caused further polarisation. Other research [3] found an interesting addition to this conclusion:

People accept ‘confirming’ evidence more easily and evaluate disconfirming information far more critically. It’s not a fair game.

Confirmation bias in interpretation and memory

We know now that confirmation bias steers how we interpret information: What we focus on, what we value and favour. But it also influences what we remember. We all suffer from selective memory or memory bias [4]. For instance, schema theory has shown that information confirming our prior beliefs is stored in our memory while contradictory evidence is not [5]. This is also where stereotyping has its roots.

People also tend to better remember expectancy‐confirming (versus expectancy‐disconfirming) information about social groups [6]. It is also good to know that:

Confirmation bias does not only affect our individual decision-making; it also affects groups. As humans, we are social animals: we interact, and we want to belong. However, our need to fit in makes us adapt our views to the views of the group.

We seek recognition by aligning our position with that of the group. This creates a tendency towards groupthink. Our need for conformity shuts out the consideration of different points of view and rules out exploratory thought [7], which, in turn, can negatively influence the quality of group decisions.

Why does confirmation bias happen?

Confirmation bias happens because it helps us. Our brain is constantly trying to lower our cognitive load. It does so by using short-cuts, also known as heuristics, to interpret the information we are faced with, for example by drawing on our past experiences, social norms or our instinct. Taking in new information, evidence, facts and figures uses energy. Confirmation bias is a perfect way to scan through information more easily.

This means that we as humans never make a fully informed decision; we automatically choose the path of least resistance and rely on short-cuts.

But there is more. If you have a firmly held belief, it is part of your identity. Sticking to that belief helps us maintain our identity or even our self-esteem [8].

Switching almost feels as if we didn’t make an intelligent decision the first time, so we’d better stick to what we held true before. I guess we have all experienced how painful it can be to admit that a strongly held belief was mistaken. Switching hurts; admitting we were wrong is not one of our favourite things to do. This doesn’t mean we can never convince someone who has different beliefs from ours. We simply have to take confirmation bias into account.

How to convince someone with different beliefs

What we can learn from this is that if we want to convince someone who holds strong beliefs or doesn’t share the same beliefs about desired behaviour just yet, we have to consider three things:

First, we have to know where we are positioned. Looking at the decision we want someone to make or the behaviour we want someone to perform, do we find ourselves in someone’s zone of acceptance or rejection? In other words, how much distance is there between you and them?

A key to convincing people is to close the belief distance between you and them.

Second, we need to know how strong these beliefs are. Feeling strongly about something narrows our zone of acceptance and widens our zone of rejection. In short, if you want someone to go along with you, you need to get a clear view of where you are on the influence playing field.

Thirdly, identify the movable middle. Jonah Berger coined this concept [9]. You have to realise (or accept) that getting everyone on your side is a tough battle to win. Haters will be haters. Or, put differently, people who are really at the belief extremes are extremely hard to budge, if it is possible at all. People who are fiercely against abortion, climate deniers who think climate change is a hoax or conspiracy thinkers who are convinced Covid doesn’t exist: well, don’t waste your energy on them. The truth is, on every issue, there is a vast majority of people who aren’t sure yet. People whose zones of acceptance and rejection are somewhat balanced out. Think about swing voters, often a large group of people who decide on election day whom to vote for. Often this group tips the balance. Therefore, these are the best people to target. The trick is not trying to influence everyone, but those with moveable minds.

Our job is to decrease the distance between the people we are trying to change or convince and us.

Not by giving people more evidence or information. That will only activate confirmation bias; it will make people dig in their heels much deeper. We have to use behavioural psychology. So, how can we do this?

Bypassing confirmation bias (1): Find common ground

First of all, we have to see if we can find common ground. Let’s say you want people to actively engage in behaviour promoting sustainability. You might encounter sceptics, people who believe climate change isn’t all that bad. Instead of counterarguing with facts, first find a belief you may both have in common. For instance, the belief that family is important. Beliefs, in turn, are closely linked to motivations. The belief that family is important could be a strong motivator for someone to do everything to ensure his/her children have the most carefree life possible.

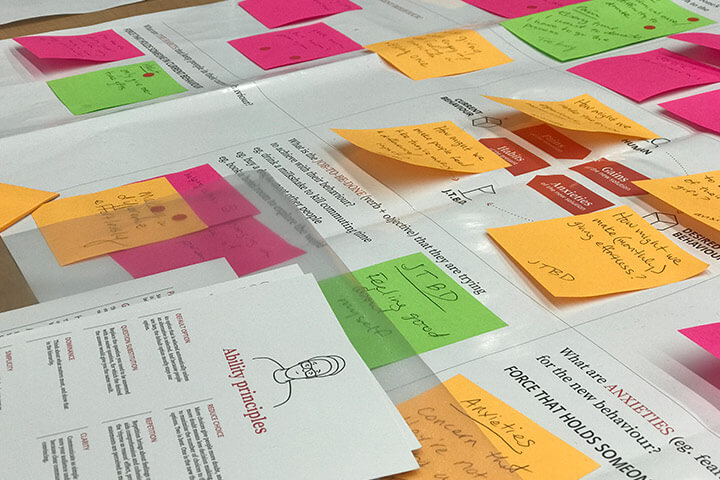

This is what we call a job-to-be-done: A deeper-lying motivation that drives behaviour. Something people want to achieve in their lives and for which they’re willing to take action, make decisions or engage in a behaviour. I might not like to make extra payments on my mortgage (behaviour), but I do want to take financial action to make sure I can still live in my beloved family home after retirement (JTBD). If that takes extra payments, so be it. It is pretty fascinating that even people with very different beliefs can have similar jobs to be done. It is there where you can find common ground and start building a bridge to close the distance between you. You could, for instance, use a principle from behavioural psychology called question substitution.

Let’s go back to the sceptics of climate change. Instead of asking them: ‘Do you want to engage in sustainable behaviour?’, you could ask them a different question: ‘Do you want to help build a healthy community for your children?’ That’s a far easier question for them to answer as it fits their beliefs about the importance of family. The fact that creating that family-friendly community (also) takes sustainable behaviours, such as preventing littering in local parks, limiting car usage in the neighbourhood, buying locally grown produce, planting flowers that attract bees and so on, is the behavioural side-effect we were aiming for. This is how you can stretch the zone of acceptance of sceptics.

Bypassing confirmation bias (2): Provide proof not evidence

Let’s build on the previous example. You are still dealing with climate change sceptics, and let’s say you need to design recycling behaviour. In short, you can say that people with strong beliefs need more proof before they are willing to change. However, proof isn’t evidence, nor is it facts, figures or arguments. Proof is what other people are doing.

We humans have a strong need for certainty. When designing a choice or behaviour, you have to realise that engaging in a new behaviour or making a new decision comes with uncertainty. It is new, so different, so uncertain. This makes our status quo bias and inclination to loss aversion kick in. We are simply afraid of losing what we have right now and take our current state (status quo) as our baseline or reference point for future decisions. Any change from that baseline is perceived as a loss, and inertia is the result. We rely on short-cuts to see if a given behaviour or decision makes sense.

One of the strongest short-cuts our brain takes is looking at what other people are doing. We are wired as social animals. As children, we learn by watching others; we prefer belonging to the in-group, and as we have seen, we even adapt our beliefs to conform to a group. We addressed the possibly dysfunctional decision-making of groups, but we can also leverage this human tendency to follow the beliefs and behaviour of others more positively. We do so simply by showing that more people are already recycling. In this way, we provide social proof and activate the bandwagon effect [10].

We adapt our beliefs and behaviour because many other people do the same.

If you want this behavioural intervention to work, it is best to show multiple people performing the desired behaviour. If you only showcase one person, you might run into what is called a translation problem: ‘That person is not like me or someone I aspire to be, so why follow his/her behaviour?’ We prefer to follow similar others. That’s why, when you are booking a hotel room online, you value reviews from people like you more than those from random others. If you’re a young couple, reviews from families with several kids are less relevant to you. However, in the absence of another you, quantity counts, simply because it is harder to argue against more people.

Adding to this, the more (different) sources say the same thing, the more social proof it provides. People need to hear from multiple sources to switch beliefs. Social proof can also work in our favour in another way: it creates network effects. If we can get more people to change their minds, people around them may change their minds as well.

The question remains: how many people do you need to create network effects? The answer is: it depends. If you are dealing with weaker attitudes and beliefs, people don’t need proof from many sources. However, if you are dealing with stronger opinions, you need more sources to prove your point. How does this work in practice? Jonah Berger distinguishes between a sprinkler and a fire hose strategy. Or, put differently, a scarcity or concentration approach.

If you are trying to convince people on the outer edges of the moveable middle (so, leaning more strongly towards the zone of rejection) to engage in sustainable behaviour, a concentration strategy is more effective. That is to say, you focus on a smaller group of people whom you confront with proof multiple times in a short period (the more time passes between instances of proof, the less impact it will have). However, if you are dealing with people leaning towards the zone of acceptance, one or two people will be enough proof for others to shift their beliefs and mimic behaviour. In this case, you can provide less social proof and focus on influencing more people at once.
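As a rough illustration of why the two strategies suit different audiences, here is a minimal toy sketch in Python. It assumes, purely for illustration, that each person changes their mind once they have seen a fixed number of social-proof signals (their `threshold`) and that you have a fixed `budget` of signals to hand out. The function name, the threshold rule and all numbers are hypothetical; they do not come from Jonah Berger’s work or from any study, and the sketch ignores the timing effect mentioned above.

```python
def people_convinced(budget: int, signals_per_person: int, threshold: int) -> int:
    """Toy model: how many people shift when a fixed budget of social-proof
    signals is split so that each targeted person gets `signals_per_person`.

    Assumption (hypothetical): a person shifts only if they receive at least
    `threshold` signals; stronger beliefs mean a higher threshold.
    """
    if signals_per_person < 1:
        raise ValueError("signals_per_person must be at least 1")
    people_reached = budget // signals_per_person
    return people_reached if signals_per_person >= threshold else 0


BUDGET = 12  # made-up number of social-proof signals you can afford

for label, threshold in [("weaker beliefs", 1), ("stronger beliefs", 4)]:
    # Sprinkler: one signal each, spread over as many people as possible.
    sprinkler = people_convinced(BUDGET, signals_per_person=1, threshold=threshold)
    # Fire hose: four signals each, concentrated on fewer people.
    fire_hose = people_convinced(BUDGET, signals_per_person=4, threshold=threshold)
    print(f"{label}: sprinkler convinces {sprinkler}, fire hose convinces {fire_hose}")
```

Under these assumptions, spreading signals thinly reaches the most people when beliefs are weak, but convinces no one when beliefs are strong; concentrating the same budget on fewer people is then the only approach that moves anyone.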

Bypassing confirmation bias (3): Don’t ask too much

A final intervention from behavioural science is to tone it down a bit. Rome wasn’t built in a day; the same goes for behaviour change. We have seen that hard-wired, often even unconscious human tendencies make us favour inertia over change. So, getting someone to change overnight is hard, if not impossible. Are we as humans not capable of change? We absolutely are! We change all the time (or are you still sporting your nineties hairdo and outfits?). Almost everyone has something they want to change. Only, the threshold for change is often too high. When we want to convince someone to show a new behaviour, we tend to ask too much at once. We underestimate that a new behaviour takes time, money, effort or energy. To lower the threshold for change, we should cut up end goals into smaller, specific behaviours. What’s the difference? For example, an end goal can be living a healthy life. Specific behaviours that will help achieve this end goal are, for instance, drinking six glasses of water each day, buying vegetables on Saturday and doing two 20-minute workouts each week. Together, these specific behaviours add up to the end goal. Furthermore, we need to understand that:

Behaviour is a process; if you can make someone commit to the process, change will happen.

There is a magical word in the sentence above: commit. Our brain loves simplicity. If you can make people commit to a first ask, they are likely to follow up on it. If you said yes to A, doing A is the easiest thing to do. It simply feels logical and requires no further cognitive effort. This is known in behavioural science as the commitment/consistency principle. The trick is to start with a small ask instead of a big question. To link back to what we’ve learned before, start with a small ask on common ground. Find that place of agreement that helps you build an initial connection.

Let’s go back to the climate change sceptics who hold their families so very dear: don’t ask them to live more sustainably as of now. Instead, ask them, for instance, to hand in their old paper at their children’s school. Put a recycling container next to the school playground. Make it easy (they are there to pick up their children anyway). Make it relevant for them (their motivation is to give their children the best living conditions, so putting away paper in a container next to the playground is an unconscious reminder of a way to keep their children’s playground clean).

But most importantly, by doing so, you have them commit to a first small ask. You can then build on this initial ask, slowly opening their zone of acceptance. Maybe even shifting their initial beliefs on sustainability, giving you the room to manoeuvre to provide them with more information on sustainable behaviour. Put differently: you have used Behavioural Design to prep their brain to be susceptible to both change and the information needed for that change.

Summary

Confirmation bias is the human tendency to seek, focus on or favour only information that confirms our existing beliefs. It is a strong bias as it operates largely unconsciously in our brain and is fed by the filter bubbles we all find ourselves in. Confirmation bias strengthens our prior beliefs and makes societies more polarised. If you want to change the behaviour of people who do not share the same opinions, you don’t achieve this by giving more information, evidence, facts or figures. You accomplish this by closing the distance between you and the people you are trying to influence using Behavioural Design, taking human psychology and deep human understanding as a starting point. If you want someone to change, you first need to make them willing to listen to the information required for this change.

References

[1] https://fs.blog/2017/07/filter-bubbles/

[5] http://web.mit.edu/pankin/www/Schema_Theory_and_Concept_Formation.pdf

[7] Tetlock & Lerner (2002) in Schneider, ed. by Sandra L.; Shanteau, James (2003). Emerging perspectives on judgment and decision research. Cambridge [u.a.]: Cambridge Univ. Press. pp. 438–9.

[8] Casad, Bettina J. “Confirmation bias”. Encyclopedia Britannica.

[9] https://jonahberger.com/books/the-catalyst/

[10] Cherry, K. (2020, April 28). The Bandwagon Effect Is Why People Fall for Trends.

[11] SUE blog: Cialdini on persuasion

Blog header photo credits: Pawel Czerwinski

How do you do. Our name is SUE.
